In the ever-evolving world of cloud-native solutions, it can be a daunting task to maintain message brokers. For a while, our team was responsible for a self-managed RabbitMQ instance. While this worked well initially, we encountered challenges in terms of maintenance, version updates, and data recovery. This led us to explore Amazon MQ, a fully managed message broker service offered by AWS.

In this article, we’ll discuss the advantages of both self-managed RabbitMQ and Amazon MQ, the reasons behind our migration, and the hurdles we faced during the transition. Our journey offers insights for other developers who are considering a similar migration path.

The Self-Managed RabbitMQ Era

Our experience with self-managed RabbitMQ was characterized by control, high availability, and the responsibility to ensure data integrity. Here are some of the advantages of this approach:

- Total Control

Running your own RabbitMQ server gives you complete control over configuration, security, and updates. You can fine-tune the setup to meet your specific requirements, which is ideal for organizations with complex or unique messaging needs.

- High Availability

It’s worth noting that our instance ran on AWS EC2, whose SLA guarantees only 99.99% availability, but in practice we achieved an uptime of 99.999% with our self-managed RabbitMQ setup. Downtime was almost non-existent, which ensured a reliable message flow through our system. High availability is crucial for many mission-critical applications.

- Data Recovery

Ironically, data recovery was the weak spot of our self-managed RabbitMQ setup rather than an advantage. In the event of a crash, we were not confident that we could restore all of our data. This vulnerability was one of the things that pushed us to consider Amazon MQ, a fully managed solution.

The Shift to Amazon MQ

As time passed, it became apparent that managing RabbitMQ was no longer sustainable for our team. Here are the primary reasons that drove us to explore Amazon MQ as an alternative:

- Skills Gap

Our team lacked in-house experts dedicated to managing RabbitMQ, which posed a risk to our operations. As RabbitMQ versions evolved, staying up to date became increasingly challenging. This skills gap was another reason to consider a fully managed solution.

- AWS Integration

As an AWS service, Amazon MQ seamlessly integrated with our existing AWS infrastructure, providing us with a more cohesive and consistent cloud environment. It allowed us to leverage existing AWS services and tools, which resulted in a smooth migration process.

- Managed Service

The promise of offloading the operational burden to AWS was enticing. Amazon MQ handles tasks like patching, maintenance, and scaling. This allows our team to focus on more strategic initiatives.

- Enhanced Security

One key advantage of switching to Amazon MQ is its strong foundation on AWS infrastructure. This brings robust security practices and regular updates that are integrated into the system for us. It gives us confidence, because we know that potential vulnerabilities are actively monitored and managed.

The Amazon MQ Experience

While the move to Amazon MQ presented numerous benefits, we also encountered some challenges that are worth noting:

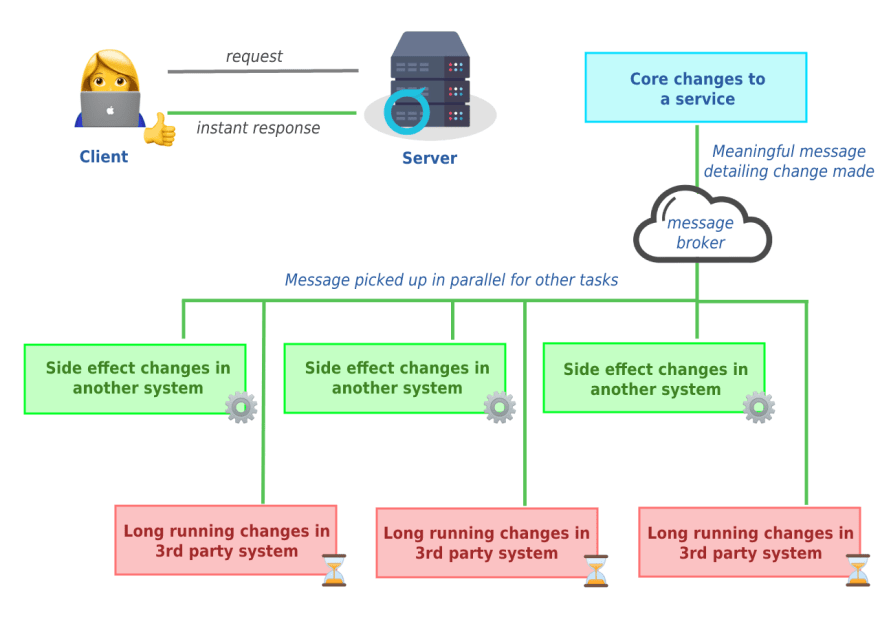

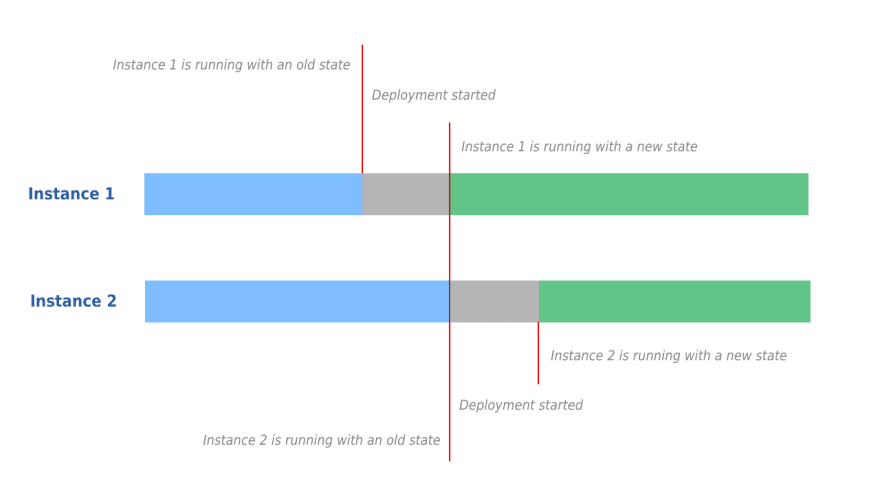

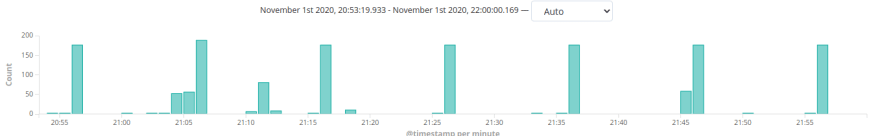

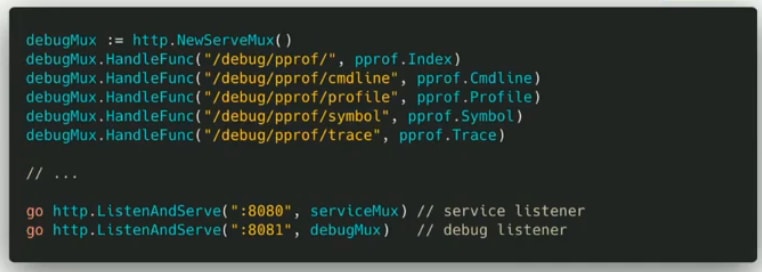

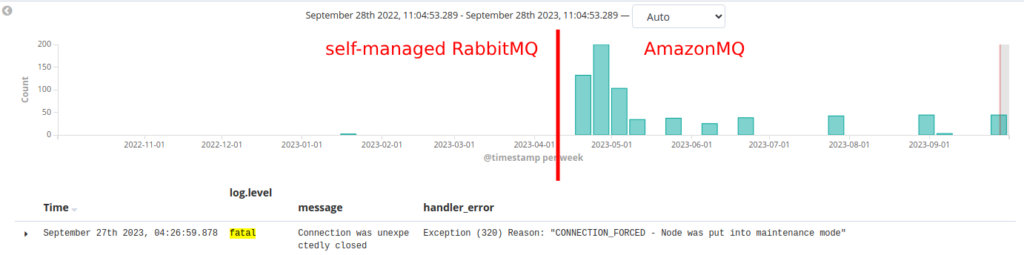

Owned by the author

- SLA Guarantees

Amazon MQ’s service level agreement (SLA) guarantees 99.9% availability. This is acceptable for many businesses but was a step down from the 99.999% uptime we had observed with our self-managed RabbitMQ. The difference might seem small, but in a 30-day month 99.9% allows roughly 43 minutes of downtime, while 99.999% allows less than half a minute. It was a trade-off we had to accept.

- Limited Configuration

Amazon MQ abstracts many configuration details. This simplifies management for most users. However, this simplicity comes at the cost of fine-grained control. For organizations with highly specialized requirements, this might be a drawback.

- Cost Considerations

Amazon MQ is a managed service, which means there are associated costs. While the managed service helps reduce operational overhead, it’s crucial to factor in the cost implications when migrating.

What do three nines (99.9%) really mean?

Here are my calculations according to the Amazon MQ SLA:

- if the monthly downtime is lower than ~43 minutes, they will charge 100% of the monthly cost

- if the monthly downtime is between ~43 minutes and ~7 hours, they will charge 90% of the monthly cost

- if the monthly downtime is between ~7 hours and ~1 day, they will charge 75% of the monthly cost

- and if the monthly downtime is higher than ~1 day, they won’t charge anything for that month
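To sanity-check the downtime figures above, here is a minimal Python sketch that converts an availability percentage into a monthly downtime budget. It assumes a 30-day month, and the function name `downtime_budget_minutes` is my own, not something from AWS. The percentages are the ones mentioned in this article, plus 99.0%, which is where the ~7 hours figure comes from (1% of a 30-day month).

```python
# Minutes in a billing month. Assumption: a 30-day month; real months vary slightly.
MINUTES_PER_MONTH = 30 * 24 * 60  # 43,200 minutes


def downtime_budget_minutes(availability_percent: float) -> float:
    """Return the downtime (in minutes per 30-day month) that still meets
    the given availability percentage."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return MINUTES_PER_MONTH * (100 - availability_percent) / 100


# Availability levels discussed in this article (labels are illustrative).
levels = [
    ("Amazon MQ SLA", 99.9),
    ("lower bound of the first credit tier", 99.0),
    ("EC2 SLA", 99.99),
    ("our observed self-managed uptime", 99.999),
]

for label, percent in levels:
    minutes = downtime_budget_minutes(percent)
    print(f"{percent}% ({label}): {minutes:.1f} min/month, {minutes * 60:.0f} s/month")
```

Under these assumptions, 99.9% works out to about 43 minutes per month and 99.0% to about 7.2 hours, while 99.999% leaves only about 26 seconds. Check the current SLA document for the exact tier boundaries before relying on these numbers.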

Conclusion

Our migration from self-managed RabbitMQ to Amazon MQ represented a shift in the way we approach message brokers. While Amazon MQ offered many benefits, such as reduced operational burden and seamless AWS integration, it came with some trade-offs, including a lower SLA guarantee and less granular control.

Ultimately, the decision to migrate should be based on your organization’s specific needs, resources, and objectives. For us, the trade-offs were acceptable given the advantages of a managed service within our AWS ecosystem.

The path to a cloud-native solution isn’t always straightforward, but it can lead to more streamlined operations and a greater focus on innovation rather than infrastructure management. Understanding the pros and cons of both approaches is vital for an informed decision about your messaging infrastructure.

As technology continues to evolve, it’s essential to stay adaptable and leverage the right tools and services to meet your business needs. In our case, the migration to Amazon MQ allowed us to do just that.