Our daily stand-ups play a crucial role in our team’s success. We wanted to make them more efficient, and that’s when we found something awesome in the videos from “Development That Pays”: Walking the Board.

The Problem

We’ve all been there: the endless cycle of daily stand-ups where the team updates on what they did yesterday, what they’re working on today, and any blockers they’re facing.

While it seems like a straightforward approach, it has its drawbacks. The individual spotlight often overshadows the collaborative nature of the team event, with team members more focused on crafting their own stories than actively listening to others. This realization prompted us to seek a more effective alternative.

The Discovery

While browsing YouTube, I discovered “Development That Pays”. It proved to be a goldmine of helpful videos. Two videos in particular caught my attention: “Daily Stand-up: You’re Doing It Wrong!” and “Agile Daily Standup – How To Walk the Board (aka Walk the Wall)”. These videos challenged our traditional approach and introduced an alternative: Walking the Board.

The “Walking the Board” Concept

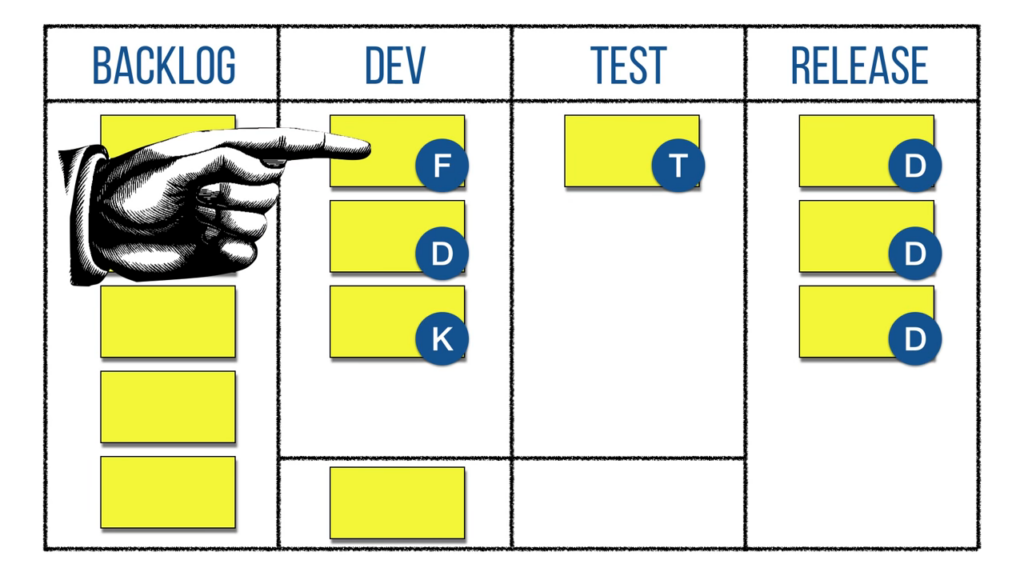

Walking the Board, also known as Walking the Wall, offered a refreshing perspective. Instead of individual updates, the team starts with the ticket on the top right of the board. The team member assigned to that ticket provides an update, and we move on to the next ticket. This method shifts the focus from individuals to the work itself and changes the stand-up from a chain of individual updates to a team event.

This approach transforms the stand-up into a collaborative journey across the board, ensuring that the spotlight remains on the work itself, not on individual narratives. There is no more struggling to come up with a good story, because the cards on the board provide the agenda. It’s a shift from “What did I do?” to “What is the status of the work on the board?”
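The walking order described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the column names, card titles, and owners are hypothetical, and the sketch assumes cards within a column are discussed top to bottom before moving one column to the left.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Card:
    title: str
    owner: str


def walk_order(board: Dict[str, List[Card]]) -> List[Card]:
    """Return cards in discussion order: rightmost column first, top card first."""
    order: List[Card] = []
    # The dict lists columns left to right, so reversing the keys walks right to left.
    for column in reversed(list(board)):
        # Assumption: within a column, cards are discussed from top to bottom.
        order.extend(board[column])
    return order


# Hypothetical board, columns listed left to right, cards listed top to bottom.
board = {
    "To Do": [Card("Write release notes", "Ana")],
    "In Progress": [Card("Fix login bug", "Ben"), Card("Add export button", "Cleo")],
    "Review": [Card("Update pricing page", "Dev")],
}

for card in walk_order(board):
    print(f"{card.title} ({card.owner})")
```

The agenda comes from the board rather than from a list of people, which is the point of the method: each owner speaks only when one of their cards comes up.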

Owned by the author

How To Walk the Board

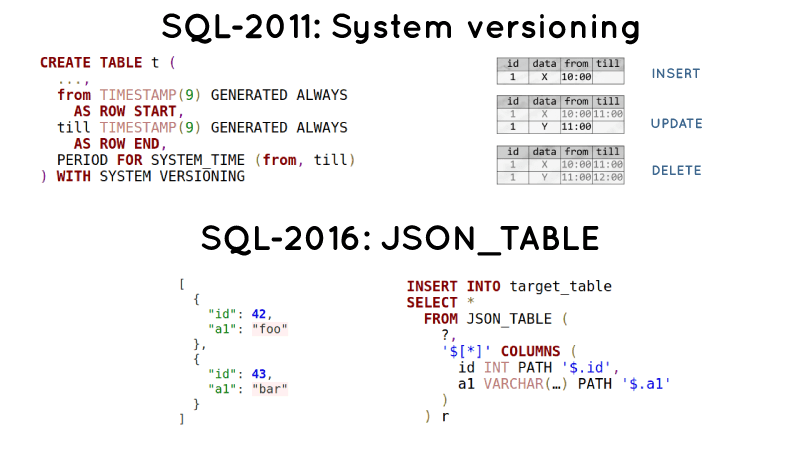

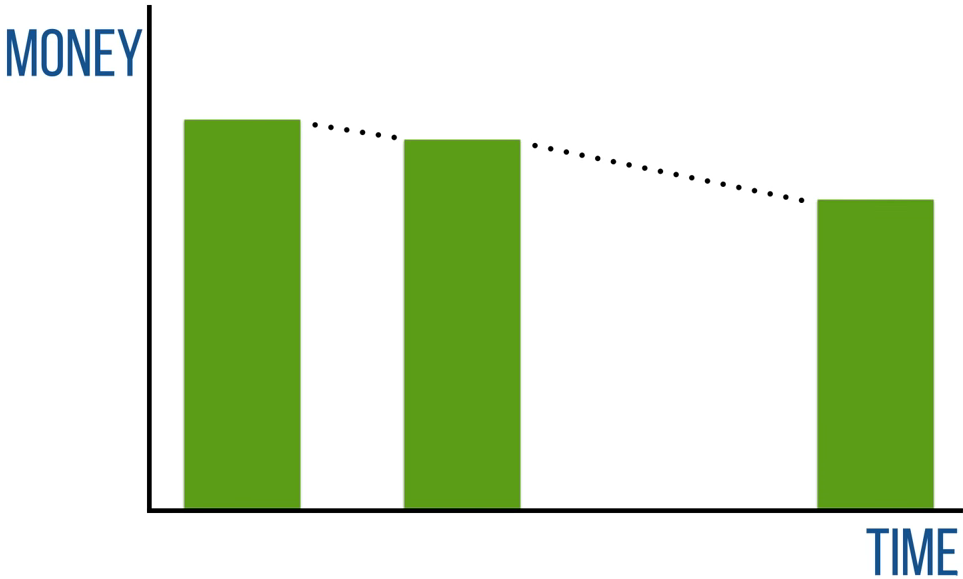

Starting at the top right of the board makes financial sense — the items closest to being live are discussed first. If you’re familiar with the concept of net present value, you’ll understand that income now is more valuable than income later, and income tomorrow is more valuable than income next week.
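For readers less familiar with net present value, here is a small sketch of the underlying arithmetic. The 10% annual discount rate and the amount of 1000 are assumptions chosen purely for illustration; neither figure comes from the videos or from our team.

```python
def present_value(amount: float, annual_rate: float, days_from_now: int) -> float:
    """Discount an amount received in the future back to its value today."""
    years = days_from_now / 365
    return amount / (1 + annual_rate) ** years


ANNUAL_RATE = 0.10  # assumed discount rate, for illustration only

for label, days in [("today", 0), ("tomorrow", 1), ("next week", 7)]:
    value = present_value(1000, ANNUAL_RATE, days)
    print(f"1000 received {label} is worth {value:.2f} today")
```

The later the income arrives, the smaller its value today, which is why the cards closest to going live get attention first.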


The second reason for starting at the right is purely practical: we are going to move the cards across the board from left to right. By starting at the right, we create space for cards to move into.

Also, the “Development That Pays” video emphasized the importance of moments of glory — allowing team members to move their own cards and take pride in their progress. It’s not just about updating the board; it’s about actively participating in the collective journey.

At the end of the meeting, the board and the team’s understanding of its current “shape” are up to date. Team members can also share topics or updates that are not related to the board, for example tasks not listed on it.

Success Story: Implementing Walking the Board

Inspired by these videos, we decided to give “Walking the Board” a shot. The transformation was remarkable! Our daily stand-ups became more than just updates — they turned into collaborative sessions centered around the work on the board.

ELMO Rule

To keep discussions concise, we introduced the “ELMO” rule. ELMO stands for “Enough, Let’s Move On”. Anyone in the stand-up, whether guiding the walk or following along, may say “ELMO” when a conversation goes off-topic or takes too long. This signals that we’ll continue the discussion later, outside our daily stand-up.


Secret Order

We established a specific order for leading the board and other meetings. This system not only enhances a sense of responsibility but also encourages shared leadership among the team.

The Result and Summary

Since adopting Walking the Board in the summer of 2020, our meetings have changed a lot. We have shifted away from giving individual updates. Instead, our focus is entirely on the work on the board. This change has made our stand-ups more productive and collaborative, as we’re now centered on the tasks at hand rather than individual narratives.

We switched from traditional stand-ups to “Walking the Board” because we wanted our meetings to be more efficient. The videos from “Development That Pays” played a key role in inspiring this change, showing us what wasn’t working with the old way and the benefits of a more team-focused approach. Now, Walking the Board is a regular part of our daily routine, making our meetings more focused, productive, and collaborative. If you’re looking to improve your stand-ups, the insights from “Development That Pays” are definitely worth exploring.