Here are some words about how the Backend Team goes about finding new team members. We want to do our part and share with the community, as well as provide a bit more transparency into ottonova and how we are building the state-of-the-art software that powers Germany’s first digital health insurance.

This article covers what we are doing in the Backend Team, what we value, and how we ensure we hire people who share our values.

The Backend Team

Our team is responsible for many of the services that power our health insurance solutions at ottonova. This includes our own unique functionality, such as document management, appointment timelines, and guided signup, as well as the interconnection of specialized, industry-specific applications.

Under the hood, we manage a collection of independent microservices. Most of them are written in PHP and deliver REST APIs through Symfony, but a couple use Node.js or Go. We keep our technology stack current and upgrade it periodically.

As a fairly young company, we spend most of our time adding new functionality to our software in cooperation with product owners. At the same time, we invest a fair amount of effort into continuously improving the technical quality of our services.

Values

Technical excellence is one of our team’s core values. To this end, we are practitioners of domain-driven design (DDD). Our services are built around clearly defined domains and follow strict separation boundaries.

Because we created an architecture that allows it, and because we have the internal support to focus on quality, we invest a lot into keeping the bar high. Whenever needed, we refactor to make sure that the Domain Layer stays up to date with the business needs and that the Infrastructure Layer is performant enough and can scale.

Although most of our work is done using PHP, we strongly believe in using the right tool for the job. Modern PHP happens to be a pretty good tool for describing a rich Domain. But we like to be pragmatic, and where it is not good enough, for example in terms of performance, we are free to choose something more appropriate.

Expectations from a new team member

From someone joining our team, we expect first of all the right mindset for working in a company that values quality. We are looking for colleagues who are capable and eager to learn, as well as happy to share their existing knowledge with the team.

A certain set of skills or the right foundation for developing those skills is needed as well. We are particularly interested in a good mastery of programming and PHP fundamentals, Web Development, REST, OOP, and Clean Code.

As actual coding is central to our work, we require and test the ability both to write code on the spot and to come up with a clean design.

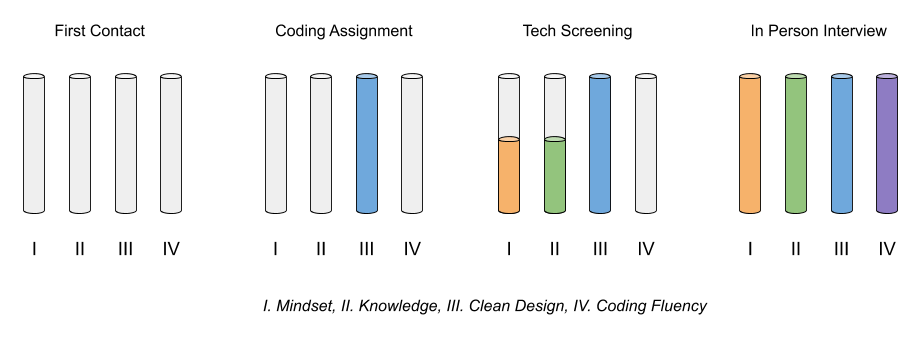

These expectations can be grouped into four main pillars that a candidate will be evaluated on:

- Mindset – able and willing to both acquire and transfer knowledge inside a team

- Knowledge – possesses the core knowledge needed for using the languages and tools we use

- Clean Design – capable of employing industry standards to come up with simple solutions that can be understood by others

- Coding Fluency – can easily translate requirements into code, and coding comes naturally

The Recruiting Process

To get to work with us, a candidate goes through a process designed to validate our main pillars, while giving them plenty of time to get to know us and have all their questions answered.

It starts with a short call with HR, followed by a simple take-home coding assignment. Next, there is a quick technical screening call. If everything is successful, we finish up with an in-person meeting where we take 1-2 hours to get to know each other better.

The Coding Assignment

The technical part of our process starts with a coding assignment that we send to applicants, which counts mostly toward the Clean Design pillar. This is meant to allow them to show how they would normally solve a problem in their day-to-day work. It can be done at home with little time pressure: it is estimated to take a couple of hours, and it can be submitted within 10 days.

The solution could potentially fit into a few lines of code. But since the requirement is to treat it as a realistic assignment, we expect something a bit more elaborate. We are particularly interested in how well the design reflects the requirements, the use of clean OOP and language features, the correctness of the result (including edge cases), and tests.

We value everyone’s time and we don’t want unnecessary effort invested into this. We definitely do not care about features that were not asked for, over-engineered user interfaces or formatting, or design patterns used just for the sake of showcasing knowledge of them.

The ideal solution is complex enough to reflect the requirements in code, but simple enough that anyone can understand the implementation without explanations.

The Tech Screening

To test the Knowledge pillar, we continue with a Zoom call. This step was designed for efficiency. By timeboxing it to 30 minutes, we make sure everyone has time for it, even on short notice. We don’t want good candidates to lose interest while stuck in a scheduling maze.

Even though it’s short, this call gives us a considerably higher match rate for the in-person interview. Over time, we have found that there really are just a handful of fundamental concepts that we expect a new colleague to already know. Many of the others can quickly be learned by any competent programmer.

All topics covered in this screening are objectively answerable, so at the end of a successful round we can invite the candidate to the next step.

In-Person Interview

This is when we really get to know each other. This is ideally done at our office in central Munich – easier for people already close by, but equally doable for those coming from afar.

In this meeting we start by introducing ourselves to each other and sharing some information about the team and the company in general.

Next, we ask about the candidate’s previous work experience. Through this, and through the overall way our dialog progresses, we want to check the Mindset pillar and ensure that the potential new colleague fits well into our team.

After that, we go into a new round of “questioning” to test the Knowledge pillar in more depth. It is similar to the Tech Screening, but this time open-ended. Informed opinions are expected and valued. We definitely want to talk about REST, microservices, web security, design patterns and OOP in general, or even agile processes.

Then comes the fun part. We get to write some code. Well… mostly the candidate writes it, but we can also help. We go through a few mostly straightforward coding problems that can be solved on the spot. We are not looking for obscure PHP function knowledge, bullet-proof code, or anything ready to be released. We just want to see how a new problem is tackled and make sure that writing code comes naturally to the candidate. With this we cover the Coding Fluency pillar.

Afterwards it’s the interviewee’s turn. We take our time to answer any questions they may have. They get a chance to meet someone from another team and get a tour of the office.

What’s next?

The interviewers confer, and if there is a unanimous “hire” decision, we send an offer. In any case, we inform the candidate of the outcome as soon as possible, usually within a few days.

Interested in working with us? To get started, apply here: https://www.ottonova.de/jobs